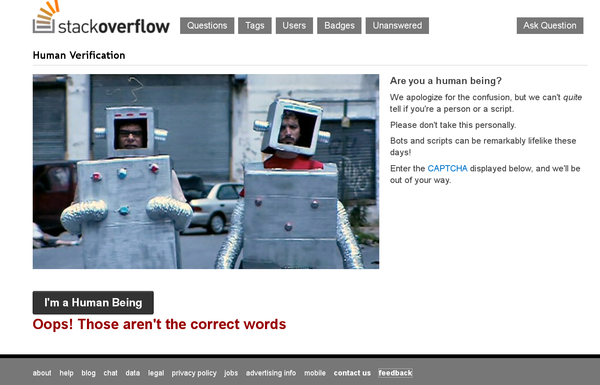

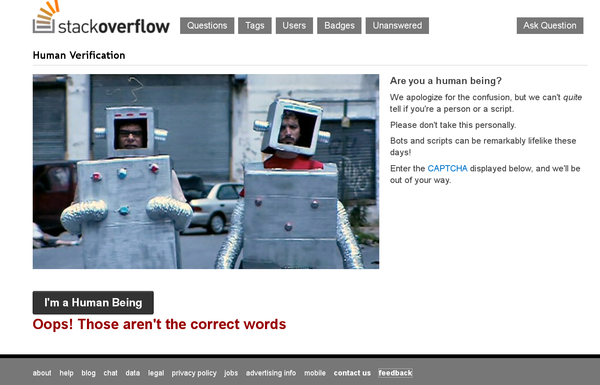

Fun With Captchas

I generally understand why they are there. But not always... see for yourself:

Can you solve that?!?

I have been asked how the various pieces in a Debian source package relate to those in a corresponding binary package. For the sake of simplicity, I'll start with a one-source -> one-binary packaging situation. Let's choose worklog, a primitive task timer, as our sample package.

The simplest way to get started is to pick a simple package and get the source. In an empty directory, do this:

    $ apt-get source worklog
    Reading package lists... Done
    Building dependency tree
    Reading state information... Done
    Skipping already downloaded file 'worklog_1.8-6.dsc'
    Skipping already downloaded file 'worklog_1.8.orig.tar.gz'
    Skipping already downloaded file 'worklog_1.8-6.debian.tar.gz'
    Need to get 0 B of source archives.
    dpkg-source: info: extracting worklog in worklog-1.8
    dpkg-source: info: unpacking worklog_1.8.orig.tar.gz
    dpkg-source: info: unpacking worklog_1.8-6.debian.tar.gz
    dpkg-source: info: applying ferror_fix.patch
    dpkg-source: info: applying makefile_fix.patch
    dpkg-source: info: applying spelling_fix.patch

It will pull down the source package's parts and unpack and patch it. The result looks like this:

    $ ls
    worklog-1.8  worklog_1.8-6.debian.tar.gz  worklog_1.8-6.dsc  worklog_1.8.orig.tar.gz

The various parts are:

worklog_1.8.orig.tar.gz: Upstream's original source tarball.

worklog_1.8-6.debian.tar.gz: The ./debian subdirectory inside of the actual working dir.

worklog-1.8: The ready-made project dir containing upstream's code and the ./debian subdirectory, with all patches applied.

worklog_1.8-6.dsc: This is the file holding it all together, with package description, checksums, a digital signature etc.

The contents of the unpacked source package look like this:

    $ cd worklog-1.8
    $ ls
    .pc  Makefile  README  TODO  debian  projects
    worklog.1  worklog.c  worklog.lsm

We are now going to examine the contents of the ./debian subdirectory, which contains the packaging instructions and assorted data:

    $ ls
    .pc  changelog  compat  control  copyright  dirs  docs  patches  rules  source

The ubiquitous .pc subdirectories are created by quilt, a patch management application used by this package. They won't leave a trace in the binary package.

Above, in the listing of the project dir, you see the two files README and TODO. Now compare with the contents of docs:

    $ cat docs
    README
    TODO

These files, alongside the copyright, will end up in the package's documentation directory, in this case /usr/share/doc/worklog. Also in the documentation directory, you will find a copy of the changelog:

    $ ls /usr/share/doc/worklog/
    README  TODO  changelog.Debian.gz  copyright  examples

The control file holds the information about which packages are going to be created, and the rules file specifies the actions to be performed for actually building the package. Also very relevant for this task are compat, which specifies the debhelper API to use, source/format, which specifies the source package format (see http://wiki.debian.org/Projects/DebSrc3.0 for details), and the patches subdirectory, containing any required patches. Typical uses for patches are, e.g., to adjust hardcoded paths to Debian standards, to implement security fixes, or to make other required changes that can't be done via the rules file. Look:

    $ ls patches
    ferror_fix.patch  makefile_fix.patch  series  spelling_fix.patch

The series file contains an ordered list of patches and is used to specify which patches to apply, and in which order. In this example, the makefile_fix.patch corrects such a hardcoded path.
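To make the role of the series file a bit more tangible, here is a minimal Python sketch of how such a list could be read. The function name `read_series` and the sample content (including its comment line) are made up for illustration, and the sketch assumes the common layout of one patch per line with `#` comment lines; it is not how quilt or dpkg-source are actually implemented.

```python
def read_series(text):
    """Return the patch file names from a series-style list, in order."""
    patches = []
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and comment lines (assumed to start with '#').
        if not line or line.startswith("#"):
            continue
        # The first field is the patch name; anything after it would be
        # options for applying the patch, which this sketch ignores.
        patches.append(line.split()[0])
    return patches


# Hypothetical content; the patch order matches the apt-get output above.
example = """\
# fixes carried by the Debian package
ferror_fix.patch
makefile_fix.patch
spelling_fix.patch
"""

for name in read_series(example):
    print(name)
```

The point is merely that the order of the lines is the order of application, which is why the patches show up in exactly this sequence when the source package is unpacked.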

If things get more complicated, the debian subdirectory also contains things like init scripts (which then end up in, e.g., /etc/init.d), and possibly manually crafted scripts like postinst, which are executed by the package management software. These, along with other administrative files, end up in /var/lib/dpkg/info. In our example, there are only two files:

    $ ls /var/lib/dpkg/info/worklog.*
    /var/lib/dpkg/info/worklog.list
    /var/lib/dpkg/info/worklog.md5sums

The worklog.list file contains one entry per file for the whole directory tree, including directories, while the worklog.md5sums file contains checksums for every regular file in the package. See, for comparison, the same listing for mdadm (a popular software RAID implementation for Linux):

    $ ls /var/lib/dpkg/info/mdadm.*
    /var/lib/dpkg/info/mdadm.conffiles
    /var/lib/dpkg/info/mdadm.config
    /var/lib/dpkg/info/mdadm.list
    /var/lib/dpkg/info/mdadm.md5sums
    /var/lib/dpkg/info/mdadm.postinst
    /var/lib/dpkg/info/mdadm.postrm
    /var/lib/dpkg/info/mdadm.preinst
    /var/lib/dpkg/info/mdadm.prerm
    /var/lib/dpkg/info/mdadm.templates

Here, the mdadm.config works together with debconf to create an initial configuration file, and the *.{pre,post}{inst,rm} scripts are designed to trigger such configuration, to discover devices etc., and to start or stop the daemon, and do some cleanup afterwards.
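Coming back to the worklog.md5sums file mentioned above: the following Python sketch shows how such a checksum list could be compared against the installed files. It assumes the usual layout of one `<md5 digest>  <path relative to the root>` pair per line; the function names are made up for this example, and dedicated tools such as debsums are the better choice for real use.

```python
import hashlib
import os


def parse_md5sums(text):
    """Map each relative path to its expected MD5 hex digest."""
    expected = {}
    for line in text.splitlines():
        if not line.strip():
            continue
        # Split on the first run of whitespace only, so that paths
        # containing spaces stay intact.
        digest, path = line.split(None, 1)
        expected[path.strip()] = digest
    return expected


def verify(expected, root="/"):
    """Return the paths whose file is unreadable or has a different digest."""
    mismatches = []
    for path, digest in expected.items():
        full = os.path.join(root, path)
        try:
            with open(full, "rb") as handle:
                actual = hashlib.md5(handle.read()).hexdigest()
        except OSError:
            # Missing or unreadable files count as mismatches here.
            mismatches.append(path)
            continue
        if actual != digest:
            mismatches.append(path)
    return mismatches
```

On a system with the package installed, something like `verify(parse_md5sums(open("/var/lib/dpkg/info/worklog.md5sums").read()))` should then return an empty list as long as no packaged file has been modified.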

This should get you started on Debian's package structure. For further information, I recommend http://www.debian.org/doc/manuals/maint-guide/ and generally, http://wiki.debian.org/DebianDevelopment .

Enjoy!

Dear Author,

recently I had a discussion with a publisher in which I asked for a translation of a popular non-fiction book from English into German, and offered to create it myself. The background for this discussion was that there are certain works which are highly relevant to their field and cannot easily, or even usefully, be replaced or re-created, but which set the "gold standard" in their respective fields. Re-doing such a work is usually futile: it amounts to throwing away the already-accumulated knowledge, and it makes it harder for people to quote a common reference to each other. If only an English language edition of the book exists, as in this example, one can expect that people with little command of the English language will not benefit from it. As a consequence, they may not get into the field covered by the book, may have a harder time than necessary acquiring proficiency, and/or may opt out of the field completely, turning to, or staying with, a possible alternative technology for which sufficient coverage in their native language is provided.

To return to the original topic, the publisher claimed that maintaining translations was too much of a burden to them, but also declined to support, authorize, or sub-license content in order for me to create a translation. I can understand these arguments, as a translation will either create cost, if the publisher does it himself, or possibly (imho marginally) lost opportunity, if someone else publishes a competing book - and a licensed translation is bound to eat into the sales figures of the original book. So at first glance, immediate greed suggests that such - let's say - "derivative works" must be shot down.

However, I strongly disagree with this position, as I think the general dissemination of knowledge must not be artificially restricted. In my opinion, the publisher not only has a moral obligation to satisfy obvious market demands; not doing so is also against the interests of both the original author of the book and, in this case, the user community at large, who are effectively excluded from a significant part of knowledge. The adverse effect on the author is easy to see: His name will not be known as widely as it could be, and his book sales will hit an upper bound sooner rather than later, thus directly limiting his potential revenue. For the users, the adverse effect is also obvious: Being cut off from a knowledge pool requires more investment into one's own research, thus driving up cost for the topic covered in the book. And for the supplier side (where I am located), this also creates a problem, as it impedes user adoption and thus contributes to limiting the market share.

I therefore ask you, the authors, to please double-check your contracts, or the contracts proposed to you, so that they either oblige the publisher to supply translations upon request and in a timely manner or to grant reasonable licenses to third parties to create and publish such translations, or exclude translations from coverage, so that you are free to contract someone else to create a translation if you deem it useful. And in the event that a publisher demands complete control over your work, I ask that you go with a different publisher who does not require you to sign such an adhesion contract.

Thank you!

I recently started to read about responsive web design, and quickly hit the image problem. This can be summarized as "How to deliver the correct images for a given device?"

There are a lot of approaches that use JavaScript or user agent sniffing to tackle this problem, but none of these appears to be satisfactory. I also came across this proposal of the W3C Responsive Images Community Group. Given past problems in browser implementations, I am not at all enthusiastic about having a new tag with complicated semantics to support in browsers' code bases.

I suggest this approach, although they say that attempts at changing the processing of <img /> tags were shot down by vendors (why? please educate me):

Use a new meta tag for images or other media in the same way as "base", only with different values for different types. E.g.:

    <meta type="base-img" condition="CSS3 media selector statement" url="some url" />
    <meta type="base-video" condition="CSS3 media selector statement" url="some url" />

That should mean: Load images from a different base URL than ordinary stuff if their src is relative, and videos from another URL. Expand this scheme to cover all interesting things (<object />, <video />, ...).

In effect, this should construct a tree for accessing resources, that the browser can evaluate as it wants to.
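As a rough illustration of how a browser could evaluate such per-type base URLs, here is a small Python sketch. Everything in it is hypothetical: the `FALLBACK` table, the function name `resolve`, and the example URLs are assumptions made for this post, and the media conditions are taken to have been evaluated already, so that `bases` only contains the entries that apply to the current device.

```python
from urllib.parse import urljoin

# Which base entries to try for each kind of resource, most specific first.
# Both the names and the order are assumptions made for this sketch.
FALLBACK = {
    "video": ["base-video", "base-img", "base"],
    "object": ["base-img", "base"],
    "img": ["base-img", "base"],
}


def resolve(kind, src, bases, page_url):
    """Resolve src for a resource kind against the most specific base given."""
    for name in FALLBACK.get(kind, ["base"]):
        if name in bases:
            # urljoin leaves an absolute src untouched and only rewrites
            # relative ones, which matches the intent of the proposal.
            return urljoin(bases[name], src)
    # No matching base at all: behave like today and use the page URL.
    return urljoin(page_url, src)


bases = {"base-img": "http://img.example.org/small/"}
print(resolve("img", "logo.png", bases, "http://www.example.org/page.html"))
```

With only a base-img entry present, relative image URLs are fetched from the image base, while a page without any such entries keeps the existing behaviour.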

The idea is that web site owners can pre-compute all desirable images and stuff, and place them where they find things convenient, and that browsers don't have to do much to actually request them. I expect these additional benefits over the suggested <picture /> tag off the top of my head:

<meta /> tags per page, not the rather verbose <picture /> tag.

<img /> tag in any way.

In case a <meta /> statement for a given tag would not be present, the browser would fall back to the next best resource type. I suggest this hierarchy:

<meta /> tag: Same - keep the existing behaviour.

base-img as the value to the type attribute <meta />: Use this for all heavy objects.

base-video as the value: Use this for videos, but continue to use other methods to find images.

(nil) (base) (img) (object) (video) ....

Yes, I am very late to the discussion, but would still like your input and pointers to relevant arguments. Thank you!

License of this text: CC-BY-NC-NA 3.0

A while back, Geert van der Spoel made a public statement about how to run Interchange on nginx, a very fine webserver which I like and have been using for a few years.

Geert's notes got me on track to finally attempt to abandon Apache for our Interchange hosting as well, and, although in a hurry, I converted my website to a similar scheme. As the way Geert interfaces to the Interchange stuff seemed too complicated to me, and since we do not have much traffic, I thought that there should be an easier way. Therefore, instead of creating a Perl-based FastCGI launcher, I simply used the provided link programs, which I then start using fcgiwrap (thanks, Grzegorz!). Also, in contrast to earlier deployments, I wanted to stay within standard system configurations and packaging. Our URL configuration is such that all URLs go to Interchange, except for static images and such.

The relevant part of our nginx configuration now looks like this (Debian host):

    # standard Debian location for Interchange
    location ^~ /cgi-bin/ic {
        root /usr/lib;
        fastcgi_pass unix:/var/run/fcgiwrap.socket;

        # this stuff comes straight from Geert:
        gzip off;
        expires off;
        set $path_info $request_uri;
        if ($path_info ~ "^([^\?]*)\?") {
            set $path_info $1;
        }
        include fastcgi_params;
        fastcgi_read_timeout 5m;

        # name your shop's link program:
        fastcgi_index /cgi-bin/ic/shop1;
        fastcgi_param SCRIPT_NAME /cgi-bin/ic/shop1;
        fastcgi_param SCRIPT_FILENAME /usr/lib/cgi-bin/ic/shop1;
        fastcgi_param PATH_INFO $path_info;
        fastcgi_param HTTP_COOKIE $http_cookie;
    }
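The only slightly cryptic part of this configuration is how `$path_info` gets computed. As far as I can tell, the `set` and `if` lines simply cut the query string off the request URI; the following Python sketch mimics that with the same regular expression. The function name and the sample URIs are made up for illustration.

```python
import re


def path_info(request_uri):
    """Return the request URI up to, but not including, the first '?'."""
    match = re.match(r"^([^?]*)\?", request_uri)
    # Without a query string the regex does not match, and the value
    # stays the full request URI, just like in the nginx snippet.
    return match.group(1) if match else request_uri


print(path_info("/cgi-bin/ic/shop1/index.html?page=2"))
print(path_info("/cgi-bin/ic/shop1/index.html"))
```

Both calls print `/cgi-bin/ic/shop1/index.html`.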

Enjoy!

For lack of time, today I will only briefly point to the planned Leistungsschutzrecht (an ancillary copyright for press publishers), which has an enormous potential for damage, as it would de facto abolish several fundamental mechanisms of how web sites work and how people collaborate on the Internet, if it comes into force, which is scheduled for the autumn of this year. The mechanisms to be abolished include at least

Quoting is de facto banned because, as things stand, almost every weblog is likely to count as "commercial", and thus quotations of any kind and any length are in principle subject to licensing and can therefore draw a cease-and-desist letter.

Linking is put on a par with quoting and is therefore hardly tenable any more, for the same reasons. But what is the point of web sites that do not link, and that are no longer linked to?

My assessment of this legislative initiative is as follows:

The Leistungsschutzrecht opens the floodgates to commercially motivated censorship and turns weblogs, among other things, into an incalculable financial risk.

Even this weblog here, which has hardly any content, appears to be affected according to my assessment so far, and weblogs that are run in a less autistic manner may as well shut down entirely, because their operators simply cannot do without quoting someone else's text. Ask yourself: How often do you find articles of the kind "XY said this: quote..., but my take on it is the following: ..."? And what would the Internet be if discussion of this kind were no longer possible there?

Further reading:

Today I read that Fedora intends to ship a mini boot loader with a Microsoft certificate, so that Fedora 18 can be run alongside Windows 8 on the same computer.

As has already been explained in many other places, this particular form of security has a considerable drawback for all users:

You can only boot signed software.

In plain terms, this means:

The bottom line is that the problem can be summarized like this:

You are being expropriated by having the control over your PC taken away from you.

The intended procedure therefore amounts to nothing less than a betrayal by (at least) RedHat and Fedora of the rest of the Linux community, since Microsoft's extortion can only be opposed with any prospect of success if it is opposed jointly. So the bigger war chest will presumably win, not the better technology and the better cooperation, as has been customary in the Free Software scene so far.

Moreover, this means that regular payments to Microsoft become due for every computer running Linux if the computer is to keep running (currently, $99 is required for each signing, for instance for security updates). Do we really have to hand Microsoft control over the price of running a Linux machine?

Let us oppose this expropriation together (link in English).

Links:

FSFE (German)

Back in the eighties, the ISO guys raved to us about X.500, the big brother of LDAP, which however required at least a small mainframe to run, about X.400, with which you could even then send emails for 50 pfennigs or so, per 10 kB each, to people with such beautiful addresses as c=US;a=;p=PoseyEnterprises;o=SouthCarolina;s=Posey;g=Brien, and about similarly useful, but above all expensive, things. These guys were of the opinion that the Internet was just a flash in the pan that would soon perish in its self-inflicted chaos. As we all know, this assessment did not find a majority among the population at the time, so that X.400 and co. can nowadays only be found in niche applications where money is not necessarily an issue. This chaos must of course be counteracted somehow, and discipline, order, and the obligatory customs and toll booths must be re-established on the Internet as well. After all, it is well known to be an outrage that a mail overseas is just as cheap as one to the neighbouring village, and that someone can simply set up an email address to their own liking instead of first having to apply for it and have it certified (at least back then, only in certain forms that precisely designate the applicant's place of residence and/or employer); that surely cannot be a permanent state of affairs. Or can it?

In any case, the organization that worked out these excellent proposals for structured communication back then has recently made a new attempt at containing the chaos in data communication, and has now declared that it alone is predestined to take over the supervision of the Internet. And, of course, that this is an urgent matter that brooks no further delay.

I cannot agree with this assessment at all. Instead, I ask the readers of these lines to get involved against such proposals wherever possible (see the list of links below), because otherwise the day is not far off when you may finally pay roaming charges on the Internet as well, in the manner of mobile phone calls, but in exchange get to see a page with a stop sign more often, for the safety of us all, of course.

Thank you!

Here are a few links from other people who were faster than I was, without any judgement:

It looks like several people are severely unhappy with HTC's attitude towards ICS on their Vision device - at least, I am, and so are a number of petitioners on the web site mentioned below. Unfortunately, the fine folks at Cyanogenmod don't seem to make any progress, either, but at least, petitioning could be more focussed. So let's at least unify all petitions with that concern to build enough thrust:

Can we do the math and say that there are more than 4,500 users in total supporting this issue, and that nothing is to be gained if those are all spread across different petitions?

Ok, maybe there are duplicates (likely), but I'd say that awareness really should be raised.

And FWIW, vendors who don't at least stay out of the way of easy updates by third parties should not get further sales. Imagine you could not update your PC because the vendor locked the device down to make it (too) hard for you.

This year's "World Plone Day" takes place on 25 April 2012. I am firmly resolved to attend this year's event in Bonn. The university computing centre (Hochschulrechenzentrum) there, at my old alma mater, is hosting this event together with the Python Software Verband; it is open between 15:00 and 20:00.

Further details on the event can be found here: http://rheinland.worldploneday.de/